Background

I’ve recently been diving into the world of web scraping at work. “Web scraping” refers to extracting information from web pages. Web scraping using jQuery is a simple way to extract targeted information using client-side scripts that can be easily integrated into existing web applications. jQuery is a free JavaScript library that allows the developer to easily search for (query) elements on a webpage using search strings. The library also includes many other powerful features; see www.jQuery.com for the official documentation.

In order to ‘scrape’ a webpage, you first need to request the page itself. The jQuery load() function accomplishes this using an Ajax HTTP request, and can even extract specific page fragments based on a selector. The jQuery documentation includes an important note: because of the browser's same-origin policy, Ajax requests can only retrieve data from the same domain, sub-domain, port, and protocol. This is a deal-breaker for some applications, but it worked for me, as I needed to access data from the same sub-domain. At work, I wanted to do two things with jQuery: 1) obtain a variable ID for a .csv file in a storefront ordering system, and 2) load the file from its URL, which is based on that unique ID. I had to do this within a third-party platform hosted offsite, so finding a solution that could be integrated into a preexisting web page was vitally important.


Solution

I first used the jQuery load() function to access a storefront order page that contained the variable ID for the .csv file. In the URL parameter of the load() call, you can add a space character followed by any jQuery selector string to return a page fragment instead of the entire page. The entire page is still fetched, so you don't save time or bandwidth on the request itself, but once the page is retrieved you only have to work with the fragment you're interested in. Note that, according to the jQuery documentation, if you add a selector to the URL parameter of load(), scripts inside the loaded page will not execute. You would want the scripts to execute if, for example, you were loading a webpage into an HTML element and wanted it to display the entire page with its scripts. For my purposes, I added the selector input[name="csvid"], which loaded the page and then selected and returned only the input control whose name attribute equals "csvid".
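Here is a minimal sketch of that load() call. It assumes a hypothetical order-page path (`/store/order-page.html`) on the same sub-domain and a container element on the host page that receives the fragment. `loadCsvId` and `onId` are illustrative names, not the original code. After loading, the matched input sits inside the container, so its value attribute holds the ID.

```javascript
// Hypothetical same-sub-domain path of the storefront order page (an assumption).
const ORDER_PAGE_URL = "/store/order-page.html";

// Load only the csvid input from the order page into the container,
// then pass the input's value (the .csv ID) to onId.
function loadCsvId(containerSelector, onId) {
  // "URL selector" form: everything after the first space is a jQuery selector.
  // jQuery downloads the whole page, inserts only the matched element, and
  // does not execute the page's scripts because a selector is present.
  $(containerSelector).load(ORDER_PAGE_URL + ' input[name="csvid"]', function (responseText, textStatus) {
    if (textStatus === "error") {
      console.error("Could not load the order page");
      return;
    }
    // `this` is the container element; the inserted input carries the ID.
    onId($(this).find('input[name="csvid"]').val());
  });
}
```

For example, call `loadCsvId("#scratch", function (csvId) { console.log(csvId); });` with a hidden `<div id="scratch"></div>` on the host page (also hypothetical).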

Once I obtained the .csv identifier from the input control’s value attribute on the order page, I could make a jQuery get() call to load the .csv file itself. The jQuery get() function uses an Ajax HTTP GET request.

Upon successfully downloading the file using jQuery’s get() function, the callback function is executed. This is where I further processed the file as needed.
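The get() step and its success callback could look like the sketch below. The URL pattern in `csvUrlFor` is a hypothetical placeholder, since the real file URL depends on the platform. `parseCsv` is an illustrative minimal parser that stands in for "further processing"; it is not what the author actually did with the file.

```javascript
// Hypothetical URL pattern: adjust to however the platform builds .csv URLs from the ID.
function csvUrlFor(csvId) {
  return "/store/files/" + encodeURIComponent(csvId) + ".csv";
}

// Minimal CSV parser: commas, quoted fields, doubled quotes ("") and CRLF line
// endings. Not a complete RFC 4180 implementation.
function parseCsv(text) {
  const rows = [];
  let row = [];
  let field = "";
  let inQuotes = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (inQuotes) {
      if (ch === '"' && text[i + 1] === '"') {
        field += '"'; // escaped quote inside a quoted field
        i++;
      } else if (ch === '"') {
        inQuotes = false; // closing quote
      } else {
        field += ch;
      }
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ",") {
      row.push(field);
      field = "";
    } else if (ch === "\n") {
      row.push(field);
      rows.push(row);
      row = [];
      field = "";
    } else if (ch !== "\r") {
      field += ch;
    }
  }
  // Flush the last line if the file does not end with a newline.
  if (field !== "" || row.length > 0) {
    row.push(field);
    rows.push(row);
  }
  return rows;
}

// Download the .csv with an Ajax GET request; jQuery runs the success callback
// with the response body as text, which is parsed before being handed on.
function loadCsv(csvId, onRows) {
  $.get(csvUrlFor(csvId), function (data) {
    onRows(parseCsv(data));
  }, "text");
}
```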

Conclusions

jQuery is a powerful tool that can be used for web scraping within a sub-domain for client-side applications. Using the load() and get() functions is a fairly straightforward way to download content from a matching sub-domain for use within the browser. Once downloaded, selectors offer a powerful way to access specific information from the loaded data. All of this can be done from a single .html file, making it an ideal choice for developers working within the confines of a 3rd party platform.

Analog Forest 🌳

October 19, 2020

If you google “web scraping tutorial”, you’ll get a bunch of tech articles that tell you how to do it with Python. The toolkit in these posts is pretty standard: Python 3 (hopefully not Python 2) as the engine, the requests library for fetching, and Beautiful Soup 4 (which is 6 years old) for parsing.

I’ve also seen a few articles that teach you how to parse HTML content with regular expressions. Spoiler: don’t do this.

The problem is that I saw articles like this five years ago, and the stack has mostly stayed the same. More importantly, the solution is not native to JavaScript developers: if you would like to use technologies you are more familiar with, like ES2020, Node, and browser APIs, you won’t find direct guidance.

I’ve tried to fill that gap and create ‘the missing doc’.

Overview

Check if data is available in request

Before you start any programming, always check for the easiest available way. In our case, that would be a direct network request for the data.

Open the developer tools (F12 in most browsers), switch to the Network tab, and reload the page.

If the data is not baked into the HTML, as is the case in about half of modern web applications, there is a good chance that you don’t need to scrape and parse at all.

If you are not so lucky and still need to scrape, here is a general overview of the process:

- fetch the page with the required data

- extract the data from the page markup into some in-language structure (Object, Array, Set)

- process the data: filter it, transform it to your needs, and prepare it for future use

- save the data: write it to a database or dump it to the filesystem
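The four steps above can be sketched as a small async pipeline. The stage functions (`fetchPage`, `extract`, `transform`, `save`) are hypothetical placeholders that you would supply; the sketch only fixes their order.

```javascript
// Generic scraping pipeline: each stage is injected, so the flow stays the same
// whatever library you use for fetching, parsing, or storage.
async function runScraper(url, { fetchPage, extract, transform, save }) {
  const markup = await fetchPage(url); // 1. fetch the page with the required data
  const rows = extract(markup); // 2. markup -> in-language structure
  const records = transform(rows); // 3. filter, reshape, prepare for later use
  await save(records); // 4. database or filesystem
  return records;
}
```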

That is the easiest case for parsing. In more sophisticated ones, you can bump into pagination, link navigation, bot protection (captchas), and even real-time site interaction, but none of that is covered in this guide, sorry.

Fetching

As an example for this guide, we will scrape Messi’s goal data from Transfermarkt; you can check his stats on the site. To load the page from Node, you will need your favorite request library. You could also use the raw HTTP/S modules, but they don’t even support promises (async/await), so I’ve picked node-fetch for this task.
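The fetching step with node-fetch might look something like this. The sketch assumes you are on Node 18+ and uses the global `fetch()` built in there, which follows the same Fetch API as node-fetch. `fetchPage` is an illustrative name.

```javascript
// Download a page as an HTML string. Uses the global fetch() of Node 18+;
// with node-fetch v2 installed instead, add: const fetch = require("node-fetch");
async function fetchPage(url) {
  const response = await fetch(url);
  // fetch() only rejects on network errors, so check the HTTP status explicitly.
  if (!response.ok) {
    throw new Error(`Failed to fetch ${url}: ${response.status}`);
  }
  return response.text(); // the raw HTML
}
```

Call it with the URL of the player's goals page copied from your browser, e.g. `fetchPage(goalsPageUrl).then((html) => console.log(html.length));`, where `goalsPageUrl` is a hypothetical variable.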

Tools for parsing

There are two major approaches to this task, conveniently represented by two high-quality, highly starred, and actively maintained libraries.

The first approach is to build a syntax tree from the markup text and then navigate it with familiar, browser-like syntax. This one is fully covered by cheerio, which bills itself as jQuery for the server (IMO, they need to revise their marketing vibes for 2020).

The second way is to build the whole browser DOM, but without the browser itself. We can do this with the wonderful jsdom, a Node.js implementation of many web standards.

Let’s take a closer look at both of them.

cheerio

Despite the comparison, cheerio does not have jQuery as a dependency; it reimplements the best-known jQuery methods from scratch.

Basic usage is really easy:

- load the HTML

- that’s it: you can now use jQuery selectors and methods

You might pick this one if you need to save on size (cheerio is lightweight and fast) or if you are really familiar with jQuery syntax and, for some reason, want to bring it to your new project. Cheerio is a nice way to do any kind of HTML work your application needs.

jsdom

This one is a bit more complicated: it emulates the parts of a browser that work with HTML and JS (everything except rendering the result). It’s used heavily for testing and… well, scraping.

Let’s spin up jsdom:

- create a JSDOM instance by passing your HTML to its constructor

- then use the standard browser API

jsdom is a lot heavier and does a lot more work, so you should have a clear reason to choose it over the other options.

Parsing

For our example, I want to stick with jsdom, because it will let us show one last approach at the end of the article. The parsing part is vital but very short.

We’ll start by building a DOM from the fetched HTML.

Then you can select the table content with a CSS selector and the standard browser API. Don’t forget to create a real array from the NodeList that querySelectorAll returns.

Now you have a two-dimensional array to work with. This part is finished; next, you need to process the data to get clean, ready-to-use stats.

Processing

First, let’s check the lengths of our rows. Each row holds the stats for one goal, and we mostly don’t care how many rows there are. But rows can contain different numbers of cells, so we have to deal with each shape.

We map over the rows to get each one’s length, then deduplicate the results to see which shapes we have.
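In code, that check can be as short as this sketch (`rowShapes` is an illustrative name). A Set does the deduplication, and sorting only makes the output easier to read.

```javascript
// Distinct row lengths: map each row to its cell count, let a Set drop duplicates,
// then sort numerically.
function rowShapes(rows) {
  return [...new Set(rows.map((row) => row.length))].sort((a, b) => a - b);
}
```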

Not that bad: only four different shapes, with 1, 5, 14, and 15 cells.

Since we don’t need the rank data from the extra cell in the 15-cell case, it is safe to delete it.

A row with only one cell is useless: it just holds the name of the season, so we skip it.
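Both clean-up rules fit in one filter and one map. The text does not say which column holds the rank in 15-cell rows, so `rankCellIndex` is a parameter you would read off the live table. `normalizeRows` is a hypothetical helper name.

```javascript
// Drop one-cell season rows and remove the extra rank cell from 15-cell rows.
// rankCellIndex: position of the rank cell, to be checked against the real table.
function normalizeRows(rows, rankCellIndex) {
  return rows
    .filter((row) => row.length !== 1) // season-name rows carry no goal data
    .map((row) =>
      row.length === 15 ? row.filter((_, i) => i !== rankCellIndex) : row
    );
}
```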

For the 5-cell case (when the player scored several goals in one match), we need to find the previous full row and use its data for the missing stats.
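Here is a sketch of that back-fill. It assumes a full row has 14 cells once the rank cell is dropped, and that the five cells of a short row line up with the last five columns of a full row; verify both against the real table. `fillShortRows` is an illustrative name.

```javascript
// Assumed length of a full row after the extra rank cell has been removed.
const FULL_ROW_LENGTH = 14;

// Complete each short row with the leading (match-level) cells of the most
// recent full row. Assumes short-row cells map onto the last columns of a full row.
function fillShortRows(rows) {
  let lastFull = null;
  return rows.map((row) => {
    if (row.length === FULL_ROW_LENGTH) {
      lastFull = row;
      return row;
    }
    if (lastFull === null) {
      throw new Error("Short row found before any full row");
    }
    return [...lastFull.slice(0, FULL_ROW_LENGTH - row.length), ...row];
  });
}
```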

Now we just have to map the data to keys manually; there is nothing scientific here and no smart way to avoid it.
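The mapping itself can stay generic: pair each cell with a key by position and optionally convert some values. The key names and the minute converter below are hypothetical examples, not Transfermarkt's actual columns.

```javascript
// Hypothetical column names for illustration; a real list needs one key per cell
// of a full row, in the table's column order.
const EXAMPLE_KEYS = ["date", "opponent", "minute"];

// Pair each cell with the key at the same position; optional per-key converters
// turn strings such as "23'" into numbers.
function rowToRecord(row, keys, converters = {}) {
  if (row.length !== keys.length) {
    throw new Error(`Expected ${keys.length} cells, got ${row.length}`);
  }
  return Object.fromEntries(
    keys.map((key, i) => {
      const convert = converters[key] || ((value) => value);
      return [key, convert(row[i])];
    })
  );
}

// Usage with made-up cell values:
const record = rowToRecord(["01/01/2020", "Opponent FC", "23'"], EXAMPLE_KEYS, {
  minute: (value) => parseInt(value, 10),
});
console.log(record); // { date: '01/01/2020', opponent: 'Opponent FC', minute: 23 }
```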

Saving


We will just dump the result to a file, converting it to a string first with the JSON.stringify method.
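Here is a sketch of the saving step with Node's built-in `fs` module. `saveJson` and the `goals.json` file name in the usage line are illustrative. Passing `null, 2` to JSON.stringify only adds indentation so the file is readable.

```javascript
const fs = require("fs");

// Serialize with JSON.stringify (no replacer, 2-space indent) and write the string
// to disk; writeFileSync overwrites the file if it already exists.
function saveJson(filePath, data) {
  fs.writeFileSync(filePath, JSON.stringify(data, null, 2), "utf8");
}

// Usage: saveJson("goals.json", records);
```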

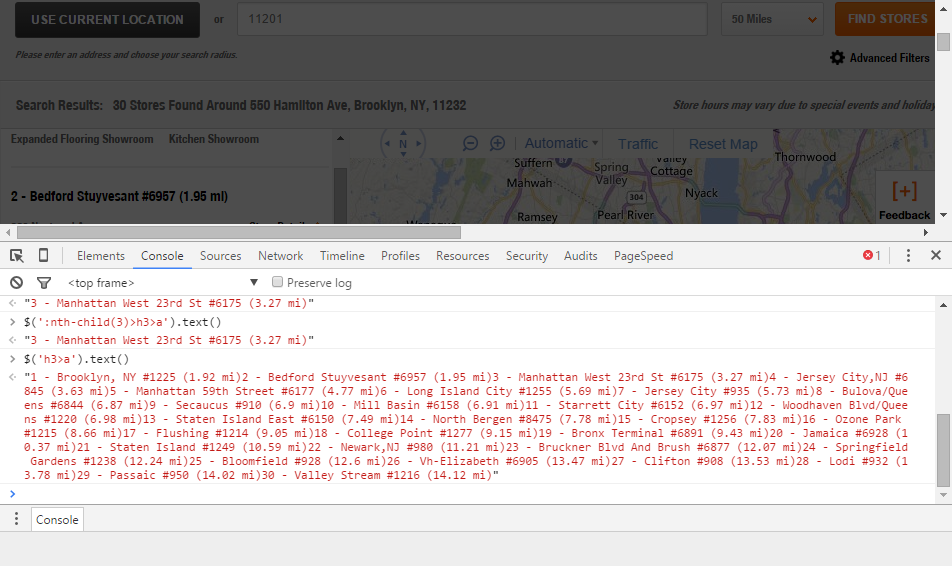

Bonus: One-time parsing with a snippet

Since we used jsdom with its browser-compatible API, we don’t actually need a Node environment to parse the data. If we only need it once from a particular page, we can run the code in the Console tab of the browser’s developer tools. Try opening any player’s stats on Transfermarkt and pasting the parsing code into the console.
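The original snippet is not reproduced here. As a readable stand-in, this sketch runs the same browser API directly against the page in the Console. The selector `table.items tbody tr` is a hypothetical guess at the goals table markup, and `scrapeGoalRows` is an illustrative name.

```javascript
// One-off console scraper: pass it `document` on a player's goals page.
// Hypothetical default selector; adjust it to the real table markup.
function scrapeGoalRows(doc, rowSelector = "table.items tbody tr") {
  return Array.from(doc.querySelectorAll(rowSelector), (tr) =>
    Array.from(tr.querySelectorAll("td"), (td) => td.textContent.trim())
  ).filter((cells) => cells.length > 1); // drop one-cell season header rows
}
```

Paste the function into the Console, then run `const rows = scrapeGoalRows(document);`.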

Then apply the handy copy() function built into the browser devtools; it copies the data to your clipboard.

Not that hard, right? And no need to deal with pip anymore. I hope you found this article useful. Stay tuned: next time we will visualize this scraped data with modern JS libraries.

You can find the whole script for this article in the following CodeSandbox:


by Pavel Prokudin. I write about web development and modern technologies. Follow me on Twitter